This is the conference that almost 20 years ago inspired me to throw myself wholeheartedly into the web tech industry as a freelance web designer / front end developer. Web Directions conferences have been a mainstay of my professional development ever since, and these days I’m very grateful for that.

From the start, these conferences placed an explicit emphasis on web standards, usability and ethical practice, and they’ve never wavered in that commitment. That meant I was paying attention to accessibility as a matter of course from the very beginning of my fledgling career. Almost 20 years later, that grounding has led to my committed focus on digital accessibility and to building a professional profile in that area.

Until recently, Covid had limited my conference attendance to online participation, but this year marked my in-person return to Summit, made possible by the support of TPGi.

Because of my role as a Technical Content Writer at the Knowledge Center of one of the largest digital accessibility agencies in the world, I approached this conference a little differently. Where previously I was just interested in anything to do with web design, front end dev, user experience, content strategy and accessibility, this time I was looking specifically for anything on digital accessibility, artificial intelligence and, especially, the intersection of the two.

For that purpose, Summit was ideal. As the conference blurb said, “Generative AI will be central to the conversation at Web Directions Summit”. Combine that with an ongoing commitment to promoting accessibility, and I had rich pickings.

Day One Opening Keynote: The Mediated Web

Rupert Manfredi, Designer, Adept

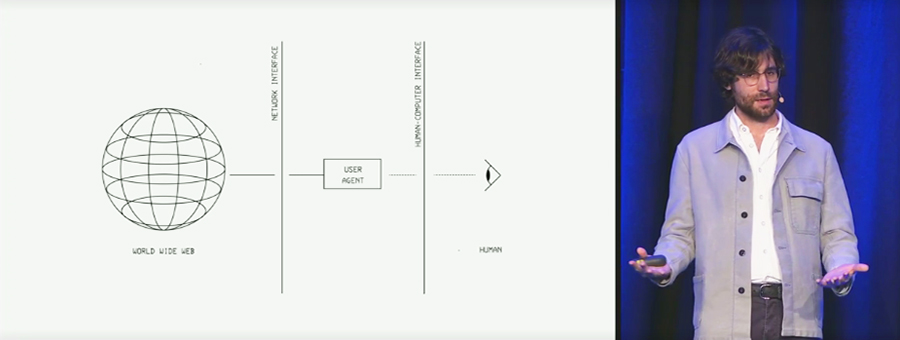

Rupert predicts that, before long, web content will not just be rendered by user agents like browsers but will be further mediated by AI-based agents that interpret content and operate functions to suit each individual user: a human-computer interface that interprets the designed user interface to deliver mediated content. His talk was informed by his experience at Mozilla Innovation Studio, where he created LangView, an experimental library for language models to respond with graphical user interfaces, and he plans to continue exploring human-computer interfaces at Adept.

Important questions he raised included how a mediated web might affect the open web, and how designers can ensure that personal computing does not become inhumane, inaccessible and controlled by gateways that make it prohibitively expensive.

I was fortunate to have a chat with Rupert after his talk, where I suggested to him that, for people with disabilities, some assistive technologies (AT) are already creating a kind of mediated web. Screen readers, for example, can be configured to deliver personalized versions of web content in ways that are accessible to blind users. I also noted that some AT is expensive while some is free, adding another layer of differentiation in who gets to access what. He was very interested in this perspective, and we agreed to keep in touch.

My takeaway was that, to some extent, the mediated web is already present for people with disabilities and AI represents a tool that can be used to enhance access to web content or inhibit it through new human-computer interfaces.

Equitable Algorithms: Designing AI for Positive Impact

Aubrey Blanche-Sarellano, CEO, The Mathpath

Aubrey’s focus was ensuring that AI is used in product design in a way that supports the principles of diversity, equity, and inclusion (DEI). This means using AI in a human-centered way that reflects and respects lived experience outside the “typical” or “average”. The talk included a practical exploration of which elements of DEI might be affected by AI applied without due consideration, and how those effects might be identified, addressed and countered.

In the Q&A, I asked about the specific implications of AI for digital accessibility for people with disabilities, and Aubrey responded that these raised important and potentially critical considerations around limited data sets, bias against disability, and the potential for increased marginalization of people with disability.

My takeaway was to not assume that AI comes equipped with awareness of the full range of human experience, and that we need to build into AI tools opportunities to check that their operations and output are diverse, equitable and inclusive.

The Art of Product Discovery

Mark Boehm, Head of Design and Strategy, Palo IT

Mark offered a view of product design that placed greater value on discovery in the product design process. Rather than following a regimented process in which an “ideas” phase is followed by a “development” phase, or even the more agile process of cycling between generating ideas and development, this approach encouraged an attitude of discovery to infuse all phases of research and development. This could take different forms in different product design processes, drawing inspiration from the child-like enthusiasm of having an idea, exploring how to make it a reality, and bringing it to fruition (or, if necessary, writing it off), all based on what is discovered along the way.

My takeaway was to pursue new ideas and keep an open mind to discovery, to seek different inputs based on user research, and to keep evolving and be prepared to change direction in product design, including digital accessibility.

Five Fundamental Principles of Inclusive Research

Bri Norton & Irith Williams, OZeWAI

Bri and Irith focused on conducting user research operations that include people with disabilities in an inclusive and positive way. The five principles centered on proceeding with an awareness and understanding of the perspectives of people with disabilities, including limitations their disabilities might create. They ranged from respect for what people with disabilities bring to user research operations to the practicalities involved.

My takeaway was that we need to properly understand how people with lived experience of disability can and should inform user research, and put into practical context what is required for them to participate fully.

Taking advantage of AI to help you write code

Lachlan Hunt, Senior Developer, Atlassian

Lachlan provided a brief overview of the potential benefits of using AI in coding, including automating procedures, producing templated code, and linting and checking. He included an illuminating exercise that involved using a range of AI tools to produce code with a specific intent, namely to merge the Fibonacci number sequence with FizzBuzz (a counting game in which multiples of 3 are replaced by the word “fizz”, multiples of 5 by the word “buzz”, and multiples of both by “fizzbuzz”). While the purpose and content were light-hearted, what was interesting was that each AI tool produced very different code, using varying methodologies to reach the desired output. Some produced very accurate output, some much less so, and some missed the point altogether.
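To give a sense of the task, here is a minimal Python sketch of one plausible reading of the exercise: generate Fibonacci numbers (assumed here to start 1, 1) and apply the standard FizzBuzz substitution to each. This is not Lachlan’s prompt or any of the AI-generated solutions shown in the talk; the function names `fizzbuzz` and `fizzbuzz_fibonacci` are hypothetical.

```python
def fizzbuzz(value: int) -> str:
    """Apply the standard FizzBuzz substitution to a single number."""
    if value % 15 == 0:  # divisible by both 3 and 5
        return "FizzBuzz"
    if value % 3 == 0:
        return "Fizz"
    if value % 5 == 0:
        return "Buzz"
    return str(value)


def fizzbuzz_fibonacci(count: int) -> list[str]:
    """Return the first `count` Fibonacci numbers (assumed to start 1, 1) with FizzBuzz applied."""
    results = []
    a, b = 1, 1
    for _ in range(count):
        results.append(fizzbuzz(a))
        a, b = b, a + b  # advance to the next Fibonacci number
    return results


if __name__ == "__main__":
    print(", ".join(fizzbuzz_fibonacci(10)))
```

Running it prints `1, 1, 2, Fizz, Buzz, 8, 13, Fizz, 34, Buzz`. Even a task this small leaves room for interpretation (whether the sequence starts at 0 or 1, whether “merge” means substitution or interleaving), which may help explain why different AI tools produced such different code from the same request.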

My takeaway was to not assume all AI will produce the same output, or even reliable output, and to carefully refine instructions provided to AI.

Annotating Designs for Accessibility

Sam Hobson & Claire Webber, Digital Accessibility Consultants, Intopia

Sam and Claire explored the idea and practicality of using markup annotations to guide designers and front end developers towards accessible design products. While tools like Figma allow for this kind of annotation, and there was agreement that it is fundamentally helpful, it was also acknowledged that the practice can be overused and thus become counter-productive. The sheer number and repetitiveness of annotations may put some designers off paying them proper attention, while some more experienced designers might resent being told what they feel they already know.

My takeaway was to consider how best to use annotations in digital accessibility guidance and apply them with moderation to achieve specific outcomes.

Day One Closing Keynote: The Expanding Dark Forest and Generative AI

Maggie Appleton, Product Designer, Ought

The dark forest that Maggie refers to is the idea that the web has become a place so populated by bots, trolls, advertisers, and junk that real people stay out of sight for fear of being attacked or bombarded, hiding in private chat rooms, Slack channels, and groups. Maggie’s fear is that this will only get worse as publicly available web content becomes dominated by AI-generated text, images and video.

It will become harder to tell human-generated content from machine versions, and the value of all web content will suffer as a result. While this was delivered in a light-hearted way, and she explored possible ways of countering the negative effect, there was an underlying sense that this was already happening and, to some extent, inevitable.

My takeaway was that the dark forest effect could become worse with the increasing use of AI-generated content unless the web industry acted to counter it, and that this would have a significant effect on people with disabilities who rely on the web for human contact and content.

Maggie’s essay of early 2023, the video of her talk based on that essay at another conference, plus references & slides are available online.

Day Two Opening Keynote: Steam Engine Time

Mark Pesce, broadcaster and futurist

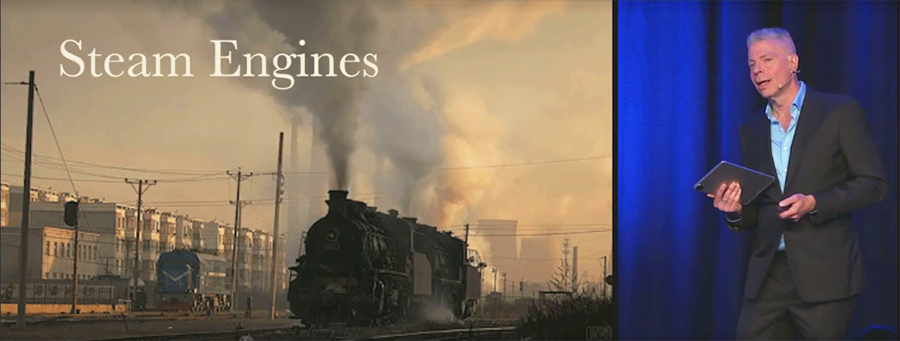

The title of Mark’s talk refers to his proposition that we are still in the early days of AI, and that its benefits and limitations are analogous to those we experienced in the age of steam power. Having opened by making clear that, in his view, there really is no such thing as “artificial intelligence” (by definition, intelligence cannot be artificially derived), Mark explained how human consciousness and cognition evolve from birth, becoming more complex and refined over time.

He suggested that what we call AI still has a great deal of evolution ahead of it, not unlike how clumsy – albeit revolutionary – steam power was in its day before giving way to more complex, streamlined and effective technologies.

My takeaway was that there is opportunity ahead to manage the evolution of AI and its role on the web and in day to day life, if we approach it without fear but with care and caution.

Design + AI = Good or Bad?

MC Monsalve & Phil Banks, Product Design Team Lead & Design Lead, ABC iView

MC and Phil focused on how the principles of AI could help the ABC, Australia’s national radio and television broadcaster, enhance user experience. With multiple outlets spanning free-to-air national TV broadcasting, live streaming, video on demand, national and local radio broadcasting, podcasting, and apps, the ABC carries a responsibility as a publicly funded entity to provide content across metropolitan, regional and remote Australia to audiences of all ages, from the very young to the very old, while also satisfying the customized and personalized demands of its individual users. AI is a necessary and usable tool for meeting these demands. It is already being used in various ways and its use will continue to grow, so it’s important to ensure that AI is used accurately, ethically and responsibly, an area in which the ABC aims to be a leader.

My takeaway was that the ABC accepts the responsibility of the national public content network to ensure that AI is used to the overall benefit of its users in every way.

Experimenting with AI at the ABC

Anna Dixon, Senior Service Designer, ABC

Anna explained the activities of the ABC’s Innovation Lab in exploring how AI is being applied to its products. Anna gave examples that focused on accessibility, such as AI-generated transcripts of radio and television presentations and synthesized speech generated from text by AI, as well as more general ways that AI is being used to enhance and customize user experience, such as automated language translation in both text and voice. While user feedback has been very positive regarding these innovations, Anna noted that in most cases, an element of human intervention is still required in the form of checking and editing.

In the Q&A, I asked about other ways in which the Innovation Lab was aiming to enhance accessibility for people with disabilities. Anna responded with enthusiasm that the Lab is actively exploring, among other things, the potential for AI image recognition to produce automated audio description, and the production of AI-generated captions. She emphasized that their user research includes people with disabilities, and reiterated the need for human intervention to check for accuracy and bias and to assure quality.

My takeaway was that AI can be used to improve broad user experience, and to allow greater customization to individual user preferences, including to enhance digital accessibility for people with disabilities.

Conversational AI Has a Massive, UX-Shaped Hole

Peter Isaacs, Senior Conversation Design Advocate, Voiceflow

Peter focused on the need to improve the way AI handles conversation, clunky and stilted chatbots being a prime example. Peter sees UX practitioners as the key to making AI voice interfaces more human-like and natural, bringing empathy and user research to the task of training voice AI. At the same time, UX designers need to engage with and understand AI and ML, creating a new skill set around Conversational Design.

In the Q&A, I asked how, given the apparent “black box” nature of AI, UX practitioners could insert themselves into the process of generating conversational AI. Peter responded that it has to happen at both ends of the process: influencing the material on which AI is trained before generation, and being able to affect the output before it’s made available to people.

My takeaway was that Conversational AI has significant implications for digital accessibility, with the potential to make user interfaces more accessible to some people with disabilities, and less accessible for others.

Baking Accessibility into Your Design System

Simon Mateljan, Design Manager, Atlassian

Simon gave an overview of how a design system can be constructed and developed to meet digital accessibility needs, and how accessibility is not something that can be applied on top of a design system but needs to be baked in from the start. Simon described how the Atlassian design system applies to a large range of products, which demands a design system that is both consistent and respectful of individual product specifications. It inevitably requires some education and explanation for its users, and needs to allow for ongoing refinement over time, while remaining true to brand requirements as well as digital accessibility standards.

My takeaway was that it is possible to create an accessible design system for a large company with a diverse range of products, if the company chooses to set that as an aim and supports the work required.

WCAG 2.2 – What it Means for Designers

Julie Grundy & Zoë Haughton, Senior Digital Accessibility Consultants, Intopia

Julie and Zoë walked us through the changes to the Web Content Accessibility Guidelines (WCAG) in version 2.2, explaining what each new success criterion is, what it focuses on, and how to test for it. They explained the success criteria in language designers could understand and provided practical examples.

In the Q&A, I asked how to manage designers’ attitudes that, for example, the new Focus Not Obscured success criteria are redundant because their issues are already covered by Focus Visible. Julie responded that accessibility consultants need to convey that the new success criteria are refinements that build on Focus Visible, allowing more specific issues to be identified along with techniques to remediate them. They agreed that this was also an education issue for more experienced accessibility engineers, but noted that ultimately the goal is to fix content accessibility problems, and that whether a problem is fixed under one success criterion or another matters less than that it is fixed.

My takeaway was that WCAG 2.2 aims to build on WCAG 2.1 and improve accessibility guidance on issues affecting users with cognitive disabilities, users with low vision, and users of touch screen interfaces.

Day Two Closing Keynote: All the Things That Seem to Matter

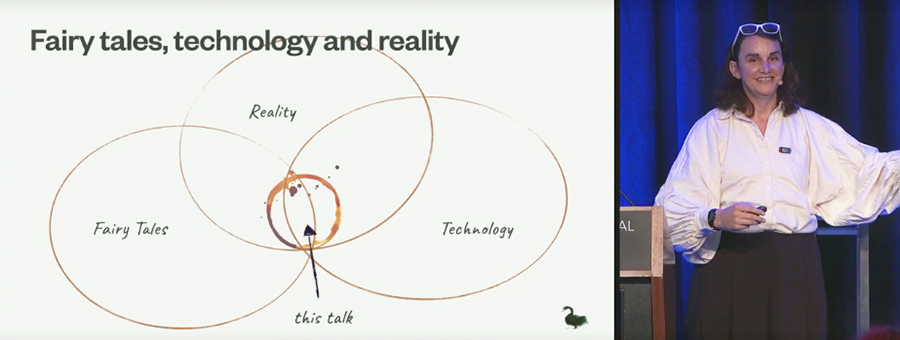

Tea Uglow, Director, Dark Swan Institute

Tea’s talk was an over-arching view of how web technology has evolved and what the future might hold, seen through the lens of a technologist who founded Creative Labs for Google in Sydney and London and has become noted for her work at the intersections of technology, arts and culture. Tea walked us through an anecdotal timeline that gave a perspective on the history of web technology, informed by both her work and her personal life, including her gender transition and her LGBTQ advocacy and activism.

Her reflections were deeply incisive and forthright (expletives, ribaldry, and possible libel included), setting her own experience of how obstructive working in web tech can be against its ongoing potential for inclusion, liberation and creative development.

My takeaway was that the history of web technology is filled with contradictions, frustrations and creative breakthroughs, and what its future holds largely depends on us, the people who build the web, individually and collectively.

Conclusion

As always at live conferences, I gained as much from the conversations between sessions as from the talks themselves. As well as catching up with my tribe – long term, like-minded web tech friends and colleagues – I also met several new people with whom I engaged to great effect, including some who worked in higher education, design systems, product design, cloud development, content strategy, and, of course, digital accessibility. I’ve no doubt these new connections will be as fruitful and long-lasting as the old tribe.

It’s worth noting that Web Directions makes recordings of all Summit presentations available to attendees after the conference, so while I physically attended seven talks, I’ll be able to view videos of the other 78 across the seven tracks of the conference later. This is done via the Conffab archive, the most accessible platform for that purpose I’ve ever encountered.

Videos have full user controls, closed captions that can be turned on or off, a rolling transcript that is synchronized to the speaker with current sections highlighted, a separate searchable transcript that can be turned on or off, control over video quality and speed, and accessible slides that are also synchronized to the presentation. These people do conference presentations right.

The Conffab library has presentations from more than 40 Web Directions conferences over the last 10+ years, and I believe it’s possible to subscribe without being a conference attendee. Part of the thinking is that people should be able to construct their own personalized conferences consisting of selected presentations.

My thanks go to the Web Directions team for organizing such a brilliant conference, and to TPGi for supporting my attendance. I’m already looking forward to the next one.